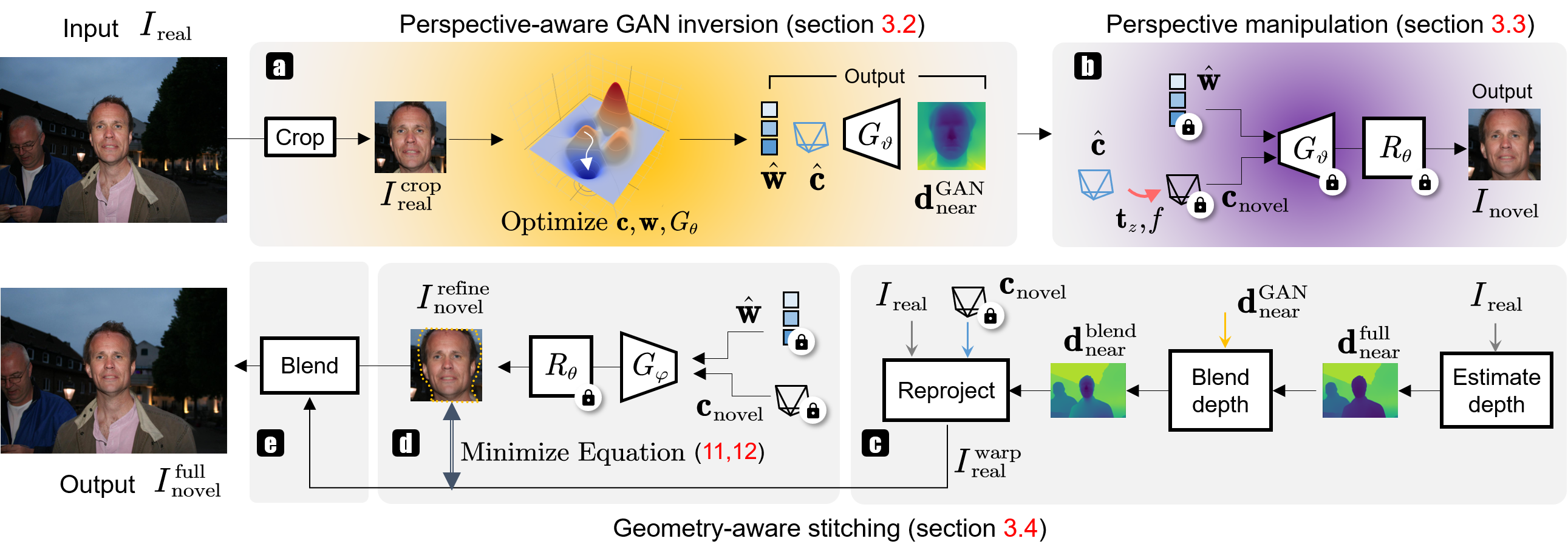

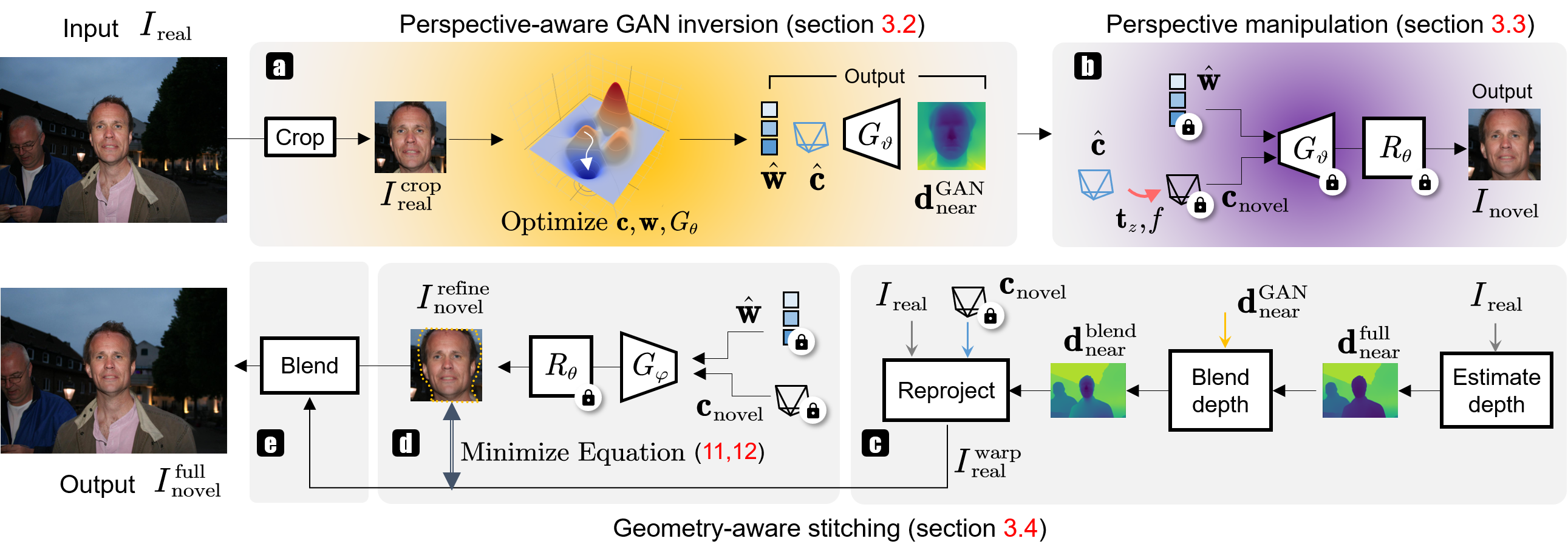

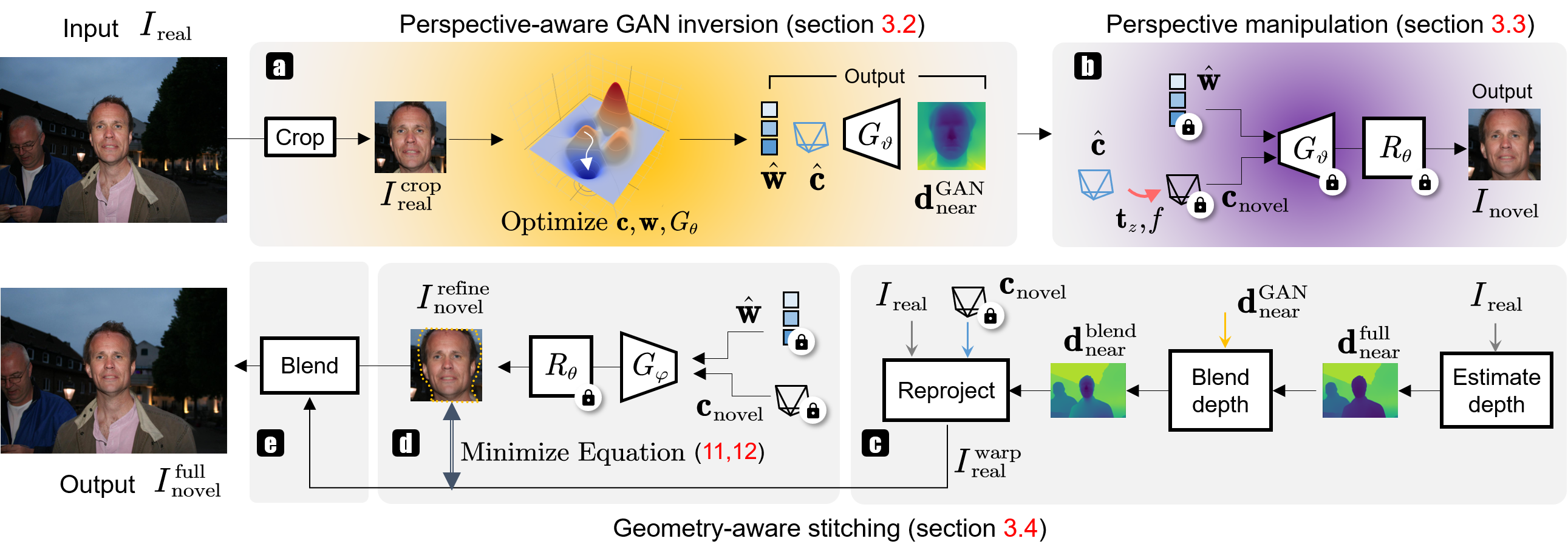

Method Overview

Portrait photos captured at close range often suffer from undesired perspective distortions. DisCO corrects these distortions and synthesizes more pleasant views by virtually enlarging the focal length and the camera-to-subject distance.
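A pinhole camera model shows why enlarging both quantities helps. The sketch below is a minimal illustration, not the authors' code. The face width, the 100 mm nose-to-ear depth, and the camera settings are hypothetical values. If the focal length scales in proportion to the camera-to-subject distance (a dolly zoom), the face keeps the same image size. Meanwhile, the magnification ratio between the nose tip and the ears moves toward 1, so near features are no longer exaggerated.

```python
def projected_size(width_mm: float, depth_mm: float, focal_mm: float) -> float:
    """Pinhole projection: image size = focal * object size / depth."""
    return focal_mm * width_mm / depth_mm


def nose_to_ear_magnification(distance_mm: float, face_depth_mm: float = 100.0) -> float:
    """Magnification at the nose tip divided by magnification at the ears.

    Assumes (hypothetically) the nose tip is `distance_mm` from the camera and
    the ears lie `face_depth_mm` farther away. The focal length cancels out,
    so the ratio (d + depth) / d depends only on the distances.
    """
    return (distance_mm + face_depth_mm) / distance_mm


def dolly_zoom_focal(focal_mm: float, distance_mm: float, new_distance_mm: float) -> float:
    """Focal length that keeps the subject's image size fixed at a new distance."""
    return focal_mm * new_distance_mm / distance_mm


if __name__ == "__main__":
    near_d, far_d, f = 300.0, 1500.0, 26.0  # hypothetical selfie-like setup
    f_far = dolly_zoom_focal(f, near_d, far_d)
    for d, focal in [(near_d, f), (far_d, f_far)]:
        size = projected_size(150.0, d, focal)  # hypothetical 150 mm face width
        ratio = nose_to_ear_magnification(d)
        print(f"d={d:.0f} mm  f={focal:.1f} mm  face={size:.2f} mm  nose/ear={ratio:.3f}")
```

In this toy setup, moving from 300 mm to 1500 mm while raising the focal length from 26 mm to 130 mm keeps the face at the same projected size. Over the same change, the nose/ear magnification ratio drops from about 1.33 to about 1.07.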

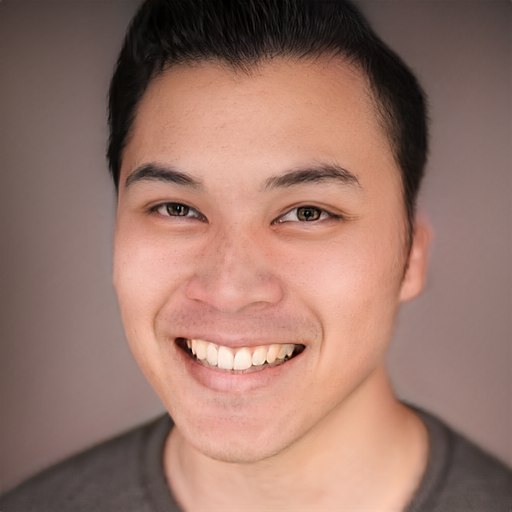

We show that our method can process diverse in-the-wild face images

Correct in-the-wild distorted images collected by us and by [Zhao+, ICCV'19]

Close-up facial images captured at short distances often suffer from perspective distortion, which exaggerates facial features and makes faces look unnatural or unattractive. We propose a simple yet effective method for correcting perspective distortions in a single close-up face. We first perform GAN inversion on the perspective-distorted input image, jointly optimizing the camera intrinsic and extrinsic parameters and the face latent code. To resolve the ambiguity of this joint optimization, we develop several techniques: starting the optimization from a short camera distance, optimization scheduling, reparametrization, and geometric regularization. Re-rendering the portrait at a proper focal length and camera distance then corrects the perspective distortion and produces more natural-looking results. Our experiments show that our method compares favorably against previous approaches both qualitatively and quantitatively, and we showcase numerous examples that validate its applicability to in-the-wild portrait photos. We will release our code and the evaluation protocol to facilitate future work.
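Recovering the camera from a distorted face is ambiguous because a longer focal length and a farther camera can produce a face of the same size. The sketch below is a toy illustration of how a reparametrization can decouple these factors. It is not the paper's method: the 3D GAN and latent code are replaced by a fixed, hypothetical set of 3D face points, and every function name here is made up for this example. The focal length is written as f = s·d, so the scale s roughly sets the face size and the distance d alone controls the distortion. For each candidate d, the code solves s in closed form. A brute-force 1D search over d then stands in for gradient-based optimization. Finally, the face is re-rendered from a larger distance.

```python
import numpy as np

# Hypothetical 3D face proxy in millimetres: (X, Y, Z), with Z the depth
# behind the nose tip. Illustrative values only, not measured data.
FACE_POINTS = np.array([
    [0.0, 0.0, 0.0],       # nose tip
    [-35.0, 30.0, 30.0],   # left eye
    [35.0, 30.0, 30.0],    # right eye
    [-25.0, -40.0, 25.0],  # left mouth corner
    [25.0, -40.0, 25.0],   # right mouth corner
    [-75.0, 10.0, 100.0],  # left ear
    [75.0, 10.0, 100.0],   # right ear
])


def project(points: np.ndarray, scale: float, distance: float) -> np.ndarray:
    """Pinhole projection with the focal length reparametrized as f = scale * distance.

    x_img = f * X / (distance + Z) = scale * X * distance / (distance + Z), so
    `scale` roughly sets the face size and `distance` alone sets the distortion.
    """
    focal = scale * distance
    depth = distance + points[:, 2:3]  # shape (N, 1), broadcasts over (X, Y)
    return focal * points[:, :2] / depth


def fit_scale(observed: np.ndarray, distance: float) -> float:
    """Least-squares scale for a fixed distance (the projection is linear in scale)."""
    unit = project(FACE_POINTS, 1.0, distance)
    return float(np.sum(unit * observed) / np.sum(unit * unit))


def estimate_camera(observed: np.ndarray, candidates: np.ndarray) -> tuple[float, float]:
    """Jointly estimate (distance, scale) by minimizing the 2D reprojection error.

    Brute-force search over `candidates` stands in for gradient-based
    optimization; the scale is solved in closed form for each distance.
    """
    best_err, best_d, best_s = np.inf, float(candidates[0]), 1.0
    for d in candidates:
        s = fit_scale(observed, float(d))
        err = float(np.sum((project(FACE_POINTS, s, float(d)) - observed) ** 2))
        if err < best_err:
            best_err, best_d, best_s = err, float(d), s
    return best_d, best_s


if __name__ == "__main__":
    # Simulated close-up: f = 0.08 * 300 mm = 24 mm at a 300 mm distance.
    observed = project(FACE_POINTS, scale=0.08, distance=300.0)
    d_hat, s_hat = estimate_camera(observed, np.geomspace(150.0, 5000.0, 400))
    # Re-render from farther away with the same scale (a virtual dolly zoom).
    corrected = project(FACE_POINTS, s_hat, 2000.0)
    print(f"estimated distance {d_hat:.1f} mm, scale {s_hat:.4f}")
    print(np.round(corrected, 2))
```

This toy fixes the face geometry. In the full problem the geometry is unknown as well, so face shape and camera distance can trade off against each other, and that trade-off is where the real ambiguity lies.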

Visual comparisons on our collected images

Visual comparisons on images collected by [Zhao+, ICCV'19]

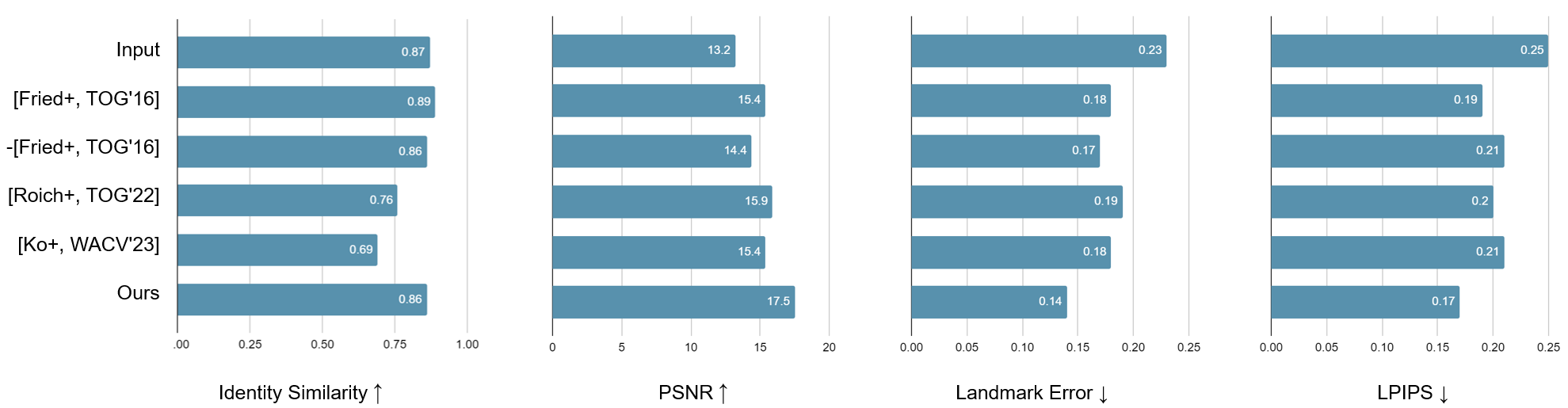

We conduct comparisons on CMDP. Results of [Fried+, TOG'16] are borrowed from their demo. -[Fried+, TOG'16] denotes our re-implementation of [Fried+, TOG'16].

Ablation study of our proposed perspective-aware 3D GAN inversion

Ablation study of our pipeline

@article{wang2023disco,
  title={DisCO: Portrait Distortion Correction with Perspective-Aware 3D GANs},
  author={Zhixiang Wang and Yu-Lun Liu and Jia-Bin Huang and Shin'ichi Satoh and Sizhuo Ma and Guru Krishnan and Jian Wang},
  journal={arXiv preprint arXiv:},
  year={2023}
}

Special thanks to Yajie Zhao for providing their results and data, and to Ohad Fried for sharing their results on the web. The in-the-wild images we collected come from the Internet and are used under Creative Commons licenses. Sources are here.